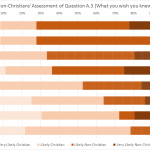

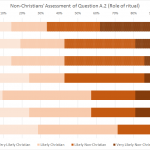

Christian H inspired the bonus question in this year’s Ideological Turing Test, and took home a prize for Miss Intellectual Congeniality: his honest Christian-round entry was the one that atheist judges were most likely to rate as written by someone interesting enough to have a coffee-argument with. However, his fellow Christians didn’t recognize him as one of our own. He was the real Christian least likely to be recognized as such, and only 11% of Christian judges rated him as Very Likely Christian. His faux-atheist entry was also received skeptically.

He’s written a post outlining his strategy at The Thinking Grounds. Here’s an excerpt:

> In writing my entries, I did what I did last year: I wrote a Christian entry that I largely agreed with, but I was conscious of adding more Christian-culture stuff than I normally would (in other words, I pretended it was something I wrote for fellowship and added more Bible stuff); and I wrote an atheist entry that was mostly what I think I would believe if I were an atheist. This last means that I write mainly my own opinion with all of the Jesus stuff excised and some stuff I stole from my atheist friends to paper over the gaps…
>
> If this is what I’m doing–fiddling what I think anyway around so that it sounds atheist, or anyway doesn’t sound Christian–am I really participating in a Turing Test? Whether or not it is a sound strategy for winning this particular game is something time alone can tell (I suspect it isn’t, because I know perfectly well that the kind of atheist I would be does not look much like the kinds of atheists that populate Leah’s blog any more than the kind of Christian I am does not resemble the kinds of Christians that populate Leah’s blog) but I still wonder whether my tactics achieve the ends that the Turing Test is supposed to promote. The goal, as I understand it, is to prove that you really do understand your ideological opposition’s reasoning. That’s all well and good…but are atheists really my ideological opposition? I don’t think reality is anything so tidy. I agree with many of my atheist friends on a lot of matters, and I disagree with many of my Christian friends on the same matters. I might also say that social conservatives are the ideological opposition to my progressive beliefs more than any religious distinction, but I don’t really think “conservative” and “progressive” are coherent groups. Other, idiosyncratic distinctions are even more important to me: tentativeness/certainty, for instance, or altruism/self-interest, or capitalism/anti-capitalism.
>
> So if I were to really participate in this Turing Test, I might be forced to write an atheist entry that opposes at least polyamory, and maybe euthanasia. That exercise would force me to really understand both social conservatism and atheisms other than the one with which I’m familiar.

This is actually close to the reason I haven’t played since the first year. Obviously, I could now just write atheist entries that match the thinking of 2011!Leah (though, as was the case in 2011, atheists might take issue with the strains of Platonism). But it didn’t seem that fun or challenging to see how well I could do past!me. The first year, I was interested in seeing what I would have to change in my worldview in order to build up a relatively coherent Christian. I didn’t make the persona very different from me, because I was most interested in what it was actually necessary to change.

A Turing Test can be a way to challenge yourself to figure out why someone might support a view you oppose. That involves flipping at least some of your policy positions, so you’re arguing for something you disagree with. But you can also stick to your preferred prescriptions and try to suss out why people might disagree with you on the reasons but agree on the ultimate outcome. It might be fun to run an Arnold Kling-inspired three-way test across his three ideological axes: civilization vs. barbarism, freedom vs. coercion, and oppressed vs. oppressor. It would be especially interesting if you stuck to your positions but tried to see what it would feel like to support them in another framework. Would you change what you thought were the weak points, or which compromises you were willing to make?

I think my goal, when playing Turing games, is to get to the point where I’m a little enchanted by a new language. What’s hard to express, and what flows naturally? How does the structure of the grammar/assumptions/etc. shape what you can say and what you must say? So as long as you run up against something that feels strange, I’d guess you’ve learned something useful.