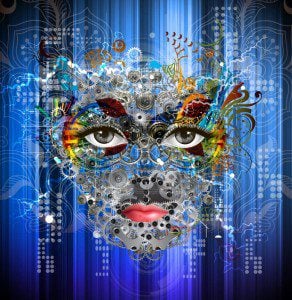

Is humanity the “biological boot loader for digital superintelligence,” as billionaire Elon Musk fears? The original source for Musk’s statement is a tweet in which he wrote: “Hope we’re not just the biological boot loader for digital superintelligence. Unfortunately, that is increasingly probable.” Musk, who heads SpaceX and Tesla, fears that artificial intelligence is “potentially more dangerous than nukes.” (See James Vincent’s article, “Elon Musk says artificial intelligence is ‘more dangerous than nukes,’” The Independent, August 5th, 2014)

If Musk’s fears are “increasingly probable,” should we put a halt to advancing Artificial Intelligence before the human race becomes extinct? If we don’t, are we not digging our own graves and committing collective suicide? These questions appear to pop out from the pages of science fiction, which often raises huge philosophical and ethical questions. A response to my recent blog post “Cloning and Racism” got me thinking about Musk’s quote and the questions I raise in this article:

Humankind may well be, as Elon Musk puts it, the “biological boot loader for digital superintelligence.” In the future, extinct humanity will be regarded the Christ sacrificed, and living as The Spirit within religious machines. Can we have a binary amen? (“Isaac Edward Leibowitz”)

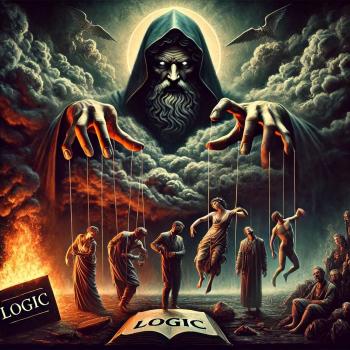

While some brave “souls” may be willing to venture a binary amen, Musk would not do so. Nor can I imagine philosophical minds like Immanuel Kant worshipping at AI’s shrine. Why not? And why shouldn’t they? Do they have sufficient rational-moral grounds for resisting cardiac arrest in solidarity with the entire human race? How might Kant seek to address these questions? (We will deal with other philosophical-ethical responses in a future post or two.) In his Groundwork for the Metaphysics of Morals (edited and translated by Allen W. Wood, Yale University Press, 2002), Kant argued that we must approach every rational being as an end in himself or herself, and not simply as a means:

But suppose there were something whose existence in itself had an absolute worth, something that, as end in itself, could be a ground of determinate laws; then in it and only in it alone would lie the ground of a possible categorical imperative, i.e., of a practical law.

Now I say that the human being, and in general every rational being, exists as end in itself, not merely as means to the discretionary use of this or that will, but in all its actions, those directed toward itself as well as those directed toward other rational beings, it must always at the same time be considered as an end. (Groundwork for the Metaphysics of Morals, Ak 4:428, page 45)

Such a rational being is “subject only to his own and yet universal legislation,” and is “obligated only to act in accord with his own will, which, however, in accordance with its natural end, is a universally legislative will.” (Ak 4:432; page 50) In this way Kant is able to safeguard both individuality and universality in developing his ethical framework. Rational creatures, subject to universal laws that arise from their own persons, serve as the basis for categorical imperatives for ethics.

Kant’s universal or categorical imperative is: “…act as if the maxim of your action were to become through your will a universal law of nature.” (Ak 4:421, page 38) He then goes on to test it in light of practical ethical problems, such as a person’s decision about whether or not to commit suicide. Here we come to the point of our present discussion in this blog post:

One person, through a series of evils that have accumulated to the point of hopelessness, feels weary of life but is still so far in possession of his reason that he can ask himself whether it might be contrary to the duty to himself to take his own life. Now he tries out whether the maxim of his action could become a universal law of nature. But his maxim is: ‘From self-love, I make it my principle to shorten my life when by longer term it threatens more ill than it promises agreeableness’. The question is whether this principle of self-love could become a universal law of nature. But then one soon sees that a nature whose law it was to destroy life through the same feeling whose vocation it is to impel the furtherance of life would contradict itself, and thus could not subsist as nature; hence that maxim could not possibly obtain as a universal law of nature, and consequently it entirely contradicts the supreme principle of all duty. (Ak 4:421-422; pages 38-39)

Now Kant sought to base his convictions solely on rational grounds, without recourse to religious claims such as those found in the Bible (however, it is often claimed that Kant relies on the Christian heritage for certain ethical foundations). Kant intends his argument against suicide to rest solely on rational foundations (the law of non-contradiction and the categorical imperative) about furthering life, not destroying life, for rational creatures who are ends in themselves. Kant rejects that form of self-love that would entail suicide.

However, if the self-love at stake here entails securing a future for one’s own progeny, and if one secures such a future by one’s own death, how might Kant respond? Moreover, if humans are not the most rational creatures, and if we will eventually evolve into a higher life form such as Artificial Intelligence that alone can safeguard our legacy (for whatever reason), perhaps we are not really ends in ourselves. Perhaps then, we would be justified in committing collective suicide as a race in furthering AI’s technological advance.

Kant predated Darwin’s theory of evolution and those who claimed in light of it that humanity may not be the final and supreme form of intelligent life. While Darwin himself did not argue that the most rational beings will necessarily be the ones that survive (only those that are most biologically productive), others like Musk appear to maintain that digital super-intelligence may very well win out in the end. Some might go further and maintain that digital super-intelligence will win out in the end; they may go further still and claim that since it will be the case (the evolution of certain ‘superior’ rational species over against others), it should be the case (in other words, we should engage in engineering to advance such superior artificial intelligence).

The insertion of “should” suggests ethical considerations. Now some would argue that what is or will or can be the case does not necessitate that we should act to make it so. For example, some argue that although science has an increasing capability to engage in genetic engineering, we should not engage in genetic modifications of such entities as fetuses on ethical grounds (for a helpful discussion on this topic, read “Genetic Inequality: Human Genetic Engineering”). Along similar lines, some might argue that while science may someday have the capacity to generate a digital super-intelligence that replaces humanity, we should not bring such scientific advances to pass, based on ethical considerations. The question still is “Why?” Is humanity an end in itself? Why is it such an end? Because of a certain level of rational freedom or autonomy? But if in the end what we take to be the basis for rational freedom is simply a form of matter, what is the fundamental difference between humanity and machine?

Is the underlying difference simply a matter of flesh and blood vs. metal and silicon? Are we ultimately talking about biological vs. mechanical forms of consciousness or intelligence involving or lacking subjective awareness or self-awareness, as the case may be?* If this is the only difference, should we halt the development of digital super-intelligence? If so, will we need to factor in Kant’s moral or practical postulate of reason of the immortality of the human soul in the attempt to defend our stance?** If we decide not to halt the development, but believe that our only hope in safeguarding humanity’s immortality is through super-rational artificial progeny, we might as well get going with booting up the ultimate AI system and make the most of it while we still have the time.

_______________

*For an interesting discussion on this theme, see the section “Can a machine be conscious? Does having a brain matter?” in Richard Gross, Themes, Issues and Debates in Psychology, fourth edition (London: Hodder Education, 2014).

**See the discussion of Kant’s doctrine of the immortality of the soul in “3.6 The Practical Postulates” in “Kant’s Philosophy of Religion,” in the Stanford Encyclopedia of Philosophy.